It would be great if the Navy could identify and prevent all hazards and invalidate Murphy’s Law. The July 2020 USS Bonhomme Richard (LHD-6) fire investigation revealed a series of root causes, from poor training, learning, and watchstanding to inadequate hazardous-material management on board that too many officers and enlisted knew about before the mishap. While the Navy uses the investigation report to address the root causes, it should reassess its operational risk model. Regardless of how the Navy or safety professionals view the findings, the Bonhomme Richard fire revealed a series of mathematical flaws in the Swiss Cheese Risk Model (SCRM) unknown to most commanders and leaders.

The SCRM

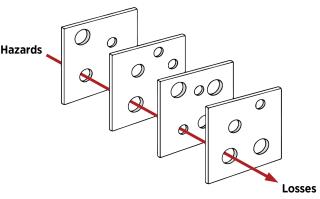

British psychologist James T. Reason introduced the Swiss Cheese Risk Model, which suggests that when defenses are layered one on the other, the risk of the holes aligning to allow a catastrophic loss or incident is lower. Nevertheless, sometimes the holes (or weaknesses) do align (see figure 1).1 Reason’s model indicates that command systems could prevent hazards by reducing or misaligning holes to prevent a direct flow of risks. In a nutshell, a system can achieve a misalignment of holes not only by design, but also with new or existing barriers, which many organizations identify as the institutional safeguards, instructions, regulations, standards, policies, and so forth that guide their operations safely.

The Navy’s operational risk management (ORM) process for risk analysis and management reflects the vision of Reason’s SCRM.2 As in many other industries (manufacturing, healthcare, aviation, etc.), the Navy’s version of the SCRM is used for etiology (causation), investigation, and prevention of safety hazards.3 Hence, the SCRM offers managers the means to create barriers in the form of slices, layers, or decks designed to mitigate systemic failures.

While none of the SCRM layers are without risk of loss, they significantly reduce risks when taken as a multilayered approach. A review of a schematic representation of the growth of the Bonhomme Richard fire provides a parallel to the Swiss Cheese Risk Model. Repeated failure to contain the fire permitted it to spread through several physical layers, much as the SCRM shows risk traveling through holes in layers of safeguards. This also raises the question of why so many administrative and physical risk-mitigation layers failed, which indicates a weakness of the model.

Why Is This a Problem?

Several aspects of the SCRM theory are often misunderstood. Many authors have dismissed the model for oversimplification, while others have attempted to modify it to better equip it to deal with complex organizational errors.4 For instance, despite physical mitigations, the Bonhomme Richard fire showed that the decks (cheese slices) did not prevent the fire from spreading to adjacent compartments. Meanwhile, the findings of the Navy’s July 2021 Major Fires Review show a series of potential pre-casualty activities that could prevent casualties when an individual system does not work as designed. The Bonhomme Richard’s multilayered decking system provided a false sense of safety regarding ORM steps, as the decks did not provide the expected level of effectiveness in stopping the spread of fire.

Reason’s model suggests that many failures must align to trigger a hazardous event, which implies that a series of failures aligned on board the Bonhomme Richard not only to start the fire, but also to prevent it from being contained expeditiously. It is undoubtedly with this perspective that the Vice Chief of Naval Operations asked fleet commanders:

Why actions put in place following significant shipboard fires, such as the implementation of reference (b) [NAVSEA Technical Publication S0570-AC-CCM-010/8010: Industrial Ship Safety Manual for Fire Prevention and Response (8010)] of the NSC letter and related guidance did not sustainably achieve the desired outcome? Why appropriate unit-level standards were not consistently sustained relative to material control, cleanliness, and fire-response readiness?5

After-action reports, including those on the Bonhomme Richard fire, point out many actionable items to prevent mishaps. Not surprisingly, after-action reports address independent failures that align in series to permit an incident (as the model predicts). The Major Fires Review shows how flaws permeate system mitigations. The identification of flaws starts with addressing core issues within the organization to reveal how individual risks within a given system are interrelated. Whether it is a command climate, operational tempo, or other underlying factors, a system’s behavior is often tied, at least in part, to some unifying factor. For instance, the failure to accomplish watertight door maintenance because of a bad command climate could have a negative effect on the reliability of such doors to protect adjacent spaces from catching fire. This means that the loss of one system makes it more likely that the subsequent system will fail.

Conditional Probability

Conditional probability best accounts for this type of failure, describing quantitative changes in the likelihood of a catastrophic event, given that something has already happened.

For example, we could assess that material condition Yoke would prevent a fire from spreading with a certain degree of effectiveness, say 90 percent. Separately from condition Yoke, we could also assume a watchstander would find and respond to fire with 90 percent effectiveness. Under traditional probability analysis, which treats the two mitigations as independent, both would fail together only 1 percent of the time (0.10 × 0.10 = 0.01), so we could assess that together they would yield a 99-percent success rate in mitigating fire spread. However, suppose an underlying factor, such as inadequate training or poor standards, ties these two issues together. In that case, the two items may have a dependent relationship, and a watchstander may be more likely to be inattentive if there is poor adherence to material condition standards. Statistically, P(B|A) denotes the probability of B given A: What is the chance that B happens if A has occurred? Applied here, the likelihood that a watchstander will fail to contain the initial fire rises if there are existing problems with material condition, which increases the chances of an uncontained fire.
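The arithmetic in the Yoke and watchstander example can be sketched in a few lines of Python. The 90-percent effectiveness figures come from the example above; the 50-percent conditional failure rate is a purely hypothetical value chosen for illustration, not a number drawn from any investigation.

```python
def p_both_fail(p_a_fails: float, p_b_fails_given_a_fails: float) -> float:
    """Chain rule: P(A fails and B fails) = P(A fails) * P(B fails | A fails)."""
    return p_a_fails * p_b_fails_given_a_fails


# From the example: each mitigation is 90 percent effective on its own.
p_yoke_fails = 0.10
p_watch_fails = 0.10

# Independent case: the watchstander's failure rate is unchanged by the
# state of material condition, so P(B | A) equals P(B).
p_both_fail_independent = p_both_fail(p_yoke_fails, p_watch_fails)

# Dependent case. ASSUMPTION (hypothetical value): when Yoke is not properly
# set, the same poor standards make the watchstander fail half the time.
p_watch_fails_given_yoke_fails = 0.50
p_both_fail_dependent = p_both_fail(p_yoke_fails, p_watch_fails_given_yoke_fails)

print(f"Independent success rate: {1 - p_both_fail_independent:.0%}")
print(f"Dependent success rate:   {1 - p_both_fail_dependent:.0%}")
```

Under these assumed inputs, the combined success rate drops from 99 percent to 95 percent. That looks modest, but the chance of an uncontained fire is five times higher even though neither mitigation looks any worse when assessed in isolation.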

Hence, in a multilayered risk-mitigation model such as the SCRM, accounting for conditionality produces a meaningful change in assessed risk compared with conventional models that presume independence. Comparing a model that presumes independence with one that recognizes conditionality, we could analyze an event in which three mitigating circumstances could prevent a catastrophic event. With no dependency, there is a remarkably elevated level of safety, with only one devastating failure in more than 12,000 occurrences. However, as dependency between mitigations increases, we can predict a rapid decrease in the number of events between failures. With even low dependency among factors, the level of risk can quickly become untenable.
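A rough sketch of this three-layer comparison follows. The article does not give the inputs behind its figures, so everything here is an assumption: each layer is given the same failure probability (0.0435, picked only so that the independent case works out to roughly one failure in 12,000 events), and dependency is modeled as a single hypothetical parameter between 0 and 1 that moves each later layer's failure probability linearly from its baseline toward certainty once the previous layer has failed.

```python
def chained_failure_probability(p_fail: float, dependency: float, layers: int = 3) -> float:
    """Probability that every layer fails in sequence.

    ASSUMED MODEL (illustrative only): the first layer fails with p_fail.
    Each later layer, given the previous one failed, fails with
    p_fail + dependency * (1 - p_fail).
    dependency = 0 is full independence; dependency = 1 means one failure
    guarantees the next.
    """
    if not 0.0 <= p_fail <= 1.0 or not 0.0 <= dependency <= 1.0:
        raise ValueError("p_fail and dependency must lie in [0, 1]")
    p_given_prior_failure = p_fail + dependency * (1.0 - p_fail)
    return p_fail * p_given_prior_failure ** (layers - 1)


def events_between_failures(p_fail: float, dependency: float, layers: int = 3) -> float:
    """Expected number of events per catastrophic failure (1 / probability)."""
    return 1.0 / chained_failure_probability(p_fail, dependency, layers)


# ASSUMPTION: per-layer failure probability, not taken from the article.
P_LAYER_FAILS = 0.0435

for d in (0.0, 0.1, 0.25, 0.5, 1.0):
    n = events_between_failures(P_LAYER_FAILS, d)
    print(f"dependency {d:4.2f}: about 1 failure in {n:,.0f} events")
```

Under these assumptions, the number of events between failures falls steeply as soon as dependency is above zero, and at full dependency three layers protect no better than one. The linear dependency parameter is a simplification; a real assessment would need conditional probabilities estimated for each pair of mitigations.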

Military officers have the training to assess risks, balance risk and mission, and use data to make decisions. Although the SCRM is conceptual rather than quantitative, understanding its quantitative nuance helps ensure that the concept can be applied sensibly, even without a full understanding of the associated risk profiles. If commanding officers and fire marshals realize the layers of the cheese cannot provide 100-percent protection, they will be able to implement more thorough mitigations and better define acceptable risk levels.6

The Future of ORM

The SCRM’s effectiveness depends on individual and organizational understanding. A failure to grasp exactly how mitigations or slices limit or permit risk will lead to reduced applicability of the framework and acceptance of incorrectly assessed risk.7 Many argue that the SCRM is a simple, general framework to communicate risk to broad groups of people. This argument focuses on positive systems mitigation by contending that differentiation between independent and dependent systems does not affect the model’s effectiveness at driving individual focus on mitigation layers.

While we do not argue with the benefit of a simple model with which to communicate risk, leaders also cannot ignore the shortcomings. The bottom line is that, although the SCRM does not inherently quantify risks, military leaders will analyze its images to make the conceptual connections the model represents. If leaders believe that risk-profile failures are independent of one another, they will be less attuned to potentially catastrophic events in situations where many levels of protection are in place.

Using the SCRM without refinement could instill a dangerous false confidence and risk tolerance by failing to draw sufficient attention to the weakness of casualty-prevention systems. This, in turn, will further amplify any activity’s inherent risk. Leaders need to recognize that the absence of events does not validate the suitability of risk-mitigation measures.

1. Justin Larouzée and Jean-Christophe Le Coze, “Good and Bad Reasons: The Swiss Cheese Model and Its Critics,” Safety Science 126 (June 2020).

2. Department of the Navy, OPNAV Instruction 3500.39D: Operational Risk Management (Washington, DC: Office of the Chief of Naval Operations, 29 March 2018).

3. Justin Larouzée and Frank Guarnieri, “From Theory to Practice: Itinerary of Reason’s Swiss Cheese Model,” ESREL (September 2015): 817–24.

4. Douglas A. Wiegmann, Laura J. Wood, Tara N. Cohen, and Scott A. Shappell, “Understanding the ‘Swiss Cheese Model’ and Its Application to Patient Safety,” Journal of Patient Safety 18, no. 2 (March 2022): 119–23.

5. Commander, U.S. Fleet Forces Command/Commander, U.S. Pacific Fleet, Major Fires Review (15 July 2021).

6. Thomas V. Perneger, “The Swiss Cheese Model of Safety Incidents: Are There Holes in the Metaphor?” BMC Health Services Research 5, no. 71 (9 November 2005).

7. James T. Reason, Erik Hollnagel, and Jean Pariès, “Revisiting the Swiss Cheese Model of Accidents,” Journal of Clinical Engineering 27, no. 1 (January 2006): 110–15.