Imagine this future scenario: The United States is on the brink of war with a hostile state threatening national security. But we have a military option that will not risk the life of a single American soldier and will protect countless innocent civilians. The President can deter nuclear war and avoid a bloody invasion with a single order: Send in the drones.

Unmanned fighter jets launch from aircraft carriers and conduct bombing runs on the enemy’s military infrastructure. Autonomous ground vehicles seek out and destroy their forces, which have already been tracked remotely by satellite. At sea, as the enemy launches a submarine campaign against merchant shipping while mining critical waterways, a network of autonomous undersea vehicles responds by locating submersed threats and strategically self-destructing to neutralize the danger.

As a last-ditch effort, the enemy launches its entire arsenal of nuclear weapons—but the missiles are immediately knocked out of the sky by directed-energy lasers, before their warheads are armed. When the conflict moves into a counterinsurgency phase, specialized drones—equipped with facial-recognition technology, electronic-warfare and improvised-explosive-device-detection capabilities, and offensive weaponry—disrupt and dismantle the rebellion to clear the way for security forces.

When the smoke clears, the campaign is hailed as one of the most decisive and humane victories in the history of warfare. Zero American casualties, and zero innocent civilians killed. The advantages of such a campaign are clear, but many technical questions need to be addressed before the U.S. military can fight a war almost entirely with robots.

Still, the most important concerns are not the technical problems involved with robotics design and development. Talented engineers, technicians, and computer programmers are finding solutions on a daily basis. The military must first assess the impact of robotics on tactics before it can effectively wield such a revolutionary weapon.

Who will be the tacticians in robotic warfare? When and where will tactical decisions be made? How will the tactics change? Why is robotic warfare really being pursued? The answers to these questions illuminate the path to victory in this type of warfare. But without sufficient control and understanding of the tactical paradigm shift, the results of robotic warfare could be far worse than the scenario described here.

Who Is the Tactician in Robotic Warfare?

In conventional warfare, a major offensive requires a multitude of tacticians, including aircraft, ship, and ground commanders. Tactical decisions are made on the spot by all grades and ranks of warfighters as well. In general, the tactical decision-making process of the military involves closely linked decisions and actions. Rarely is there any ambiguity as to who makes tactical decisions.

In robotic warfare, the tactician is not so obvious. Different levels of automation introduce different decision-makers into the process. At the lowest level of automation, remotely piloted vehicles (RPVs) are controlled directly by human operators. Currently, most RPVs are aircraft, so the operators are predominantly pilots. As the use of robots becomes more prevalent, however, non-pilots will increasingly have tactical control of robots. As actual combat experience becomes rarer, these newcomers to the tactical process will have to rely on their training to ensure their combat effectiveness.

At the highest level of automation, i.e., artificial intelligence (AI), the robot itself would be the tactician (and possibly the strategist). However, the potential military application of AI involves complex issues that are beyond the scope of this article. Slightly less-autonomous robots—which are programmed to make tactical decisions on their own without the need for a human operator—are rapidly gaining in both popularity and technological maturity. In this case, the tactician is neither the operator nor the robot, but the programmer. Given a particular scenario or set of inputs, code within the machine will predictably produce the outcome the programmer intended.

But without a solid foundation of tactical expertise, the commander’s intent could give way to the programmer’s intent. Regardless of the level of automation, robotic warfare will rely on the tacticians, whoever they may be, just as much as does conventional warfare.

Where Is the Tactical Decision Made?

Robotics will change more than just who makes decisions in warfare. Such a revolutionary capability can impact the battlespace itself. For example, when soldiers acquired rifled gun barrels in the 19th century, their accuracy increased so much that they were able to decimate advancing armies from a safe distance. The battlefield was forever expanded and dispersed. In the early 20th century, the advent of submarines introduced an entirely new dimension to the maritime battlespace.

Similarly, robotics will forever change the tactical nature of the battlespace. In robotic warfare, the critical point of decision in the tactical process is moved physically farther from the point of action than ever before. In the case of RPVs—robots with no autonomy—the tactical decision-maker will not be on the battlefield, or even necessarily in the theater. He or she will be in a secure control center half a world away, far removed from combat both geographically and psychologically.

This vast separation between decision and action requires tremendous mental discipline and concentration. Lapses in that discipline are already producing disastrous results. In October 2009, an Air Force Predator drone crashed into a mountain in Kandahar, Afghanistan, because the flight crew, who had just taken the watch at an air reserve base in California, was preoccupied with a major battle occurring below.1 When unmanned aerial vehicles crash, the fact that no pilot was killed is often highlighted. In this case, however, the distributed nature of the command system and lack of close tactical control contributed to the disaster. An onboard pilot might have been fatally injured in the crash, but it is hard to believe that he or she would not have been able to avoid that mountain in the first place.

A far more tragic example occurred in February 2010, when an Army helicopter killed 23 Afghan civilians after an Air Force Predator drone crew based in Nevada incorrectly identified them as insurgents. In the investigation report that followed, Major General Timothy McHale condemned the crew’s communication as “inaccurate and unprofessional” and stated that vital information, such as the presence of children, was downplayed to the ground commander. Additionally, McHale cited a lack of “insights, analysis, or options” from command posts in Afghanistan to the ground commander.2 The Predator drone crew, as well as analysts at the in-country command posts, failed to hold themselves to the high standard required by combat operations.

Even with experienced pilots and tacticians controlling RPVs, overcoming the challenges introduced by the geographical separation of decision and action remains difficult. Yet the military is increasingly training non-pilots to operate the aircraft. Furthermore, mission operators are frequently junior personnel—most likely lacking combat experience as more and more robotics are used—whose training is focused on the technical aspects of operating the machine.

To be effective in the long term, these operators must receive the same extensive tactical training as the aircraft, ship, and ground commanders of today’s military. Manpower and training are not areas of cost savings, particularly in the context of RPVs. It is already becoming evident that the tacticians of robotic warfare require at least the same standard of training (and compensation) as do tacticians of conventional warfare. The expanded battlespace resulting from the separation between point of decision and point of action only raises that standard.

When Is the Tactical Decision Made?

On the other end of the autonomy spectrum from RPVs are autonomous robots capable of making tactical decisions on their own. The military application of these robots continues to gain interest because they can process far more information, far more rapidly, than a person can. While a human operator is just starting the tactical decision-making process, a robot evaluates millions of possible scenarios and selects the decision that produces the best outcome. This level of automation has the potential to mitigate risk by quickly and decisively waging war, while minimizing the probability of error.

But there are consequences for using fully automated robots. They do not have AI, but rather they are preprogrammed with tactical decision-making capabilities that enable them to operate in combat without direct control from an operator. Essentially, the decisions themselves are made in advance by skilled programmers who anticipate a wide range of stimuli.

This dynamic is fundamentally no different from that of a chess supercomputer designed to compete against a grandmaster. For example, IBM’s Deep Blue defeated world champion Garry Kasparov in 1997 by processing 200 million positions per second, analyzing a database of historical master games, and using algorithms that model master chess play.3 Since all of its decisions came from code written by IBM’s team of programmers and grandmasters, Deep Blue’s moves could, theoretically, have been predicted by examining the code within the machine. Nevertheless, Deep Blue was able to defeat a world champion by combining processing power with tactical expertise. IBM’s victory is often credited to “brute force,” i.e., the computer’s ability to quickly consider every possible scenario up to 20 moves deep. However, without the grandmasters’ tactics, which were decided before the match was played, Deep Blue would have been doomed to failure against a world-class opponent like Kasparov.

Interestingly, as processing technology advanced over the next decade, the focus in computer-chess research actually shifted from processing power to chess tactics. In 2006, a PC-based chess program capable of processing only 8 million positions per second defeated world champion Vladimir Kramnik.4 Victory was achieved through advanced heuristics and algorithms, not raw processing power.
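The tradeoff between search depth and built-in expertise can be sketched with a toy example. The code below is an illustrative assumption, not Deep Blue's or any real chess engine's code: it uses a simple stone-taking game in place of chess, and the names `naive_eval`, `expert_eval`, `negamax`, and `best_move` are hypothetical. It shows a depth-limited search whose quality depends on the evaluation rule the programmer supplied before play began.

```python
from typing import Callable

# Toy game (an assumption for illustration): players alternate taking
# 1, 2, or 3 stones from a pile; whoever takes the last stone wins.
MOVES = (1, 2, 3)


def naive_eval(stones: int) -> int:
    """No built-in expertise: every unfinished position looks neutral."""
    return 0


def expert_eval(stones: int) -> int:
    """Encoded expertise: a pile that is a multiple of 4 is lost for the side to move."""
    return -1 if stones % 4 == 0 else 1


def negamax(stones: int, depth: int, evaluate: Callable[[int], int]) -> int:
    """Score a position for the side to move: +1 win, -1 loss, 0 unknown."""
    if stones == 0:
        return -1  # the opponent just took the last stone
    if depth == 0:
        return evaluate(stones)  # search budget exhausted, fall back on the rule
    return max(-negamax(stones - m, depth - 1, evaluate)
               for m in MOVES if m <= stones)


def best_move(stones: int, depth: int, evaluate: Callable[[int], int]) -> int:
    """Pick the highest-scoring legal move; ties go to the smallest move."""
    legal = [m for m in MOVES if m <= stones]
    return max(legal, key=lambda m: -negamax(stones - m, depth - 1, evaluate))


if __name__ == "__main__":
    print(best_move(23, 2, expert_eval))  # shallow search, expert rule
    print(best_move(23, 2, naive_eval))   # shallow search, no expertise
    print(best_move(7, 7, naive_eval))    # exhaustive search on a small pile
```

With a two-move search budget on a pile of 23, the expert rule picks 3 (leaving the opponent a multiple of 4), while the naive rule cannot distinguish the moves and defaults to 1. Exhaustive search with the naive rule still finds the winning move on a pile of 7, but only because the pile is small enough to search to the end.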

Just as IBM invested in the tactical expertise of chess grandmasters, the military must invest in experienced tacticians and tactical training for computer programmers. The job of the tactician here is extremely difficult, because not only must the decisions be made far from the battlefield, they must also be made long before the battle even begins. Every line of code must be written with an eye toward the tactical outcome it is intended to produce.

The programming foundation must be of the highest quality, because any robot, short of AI, lacks one of the most valuable weapons in the history of warfare: improvisation. While warfighters have always learned to adapt to unexpected situations and overcome adversity, in robotic warfare the outcome is only as good as the programming.

Injecting a human supervisor into the decision-making process does allow some tradeoff between decision quality and time management. A supervisor can safeguard against obviously poor decisions much closer to the point of action, but the advantage of near-instantaneous decision-making is lost. In the heat of battle, that tradeoff may not even be an option. In any event, most decision-making still occurs before the battle. If an assistant had moved the chess pieces for Deep Blue and only intervened if the computer was playing into a checkmate, would the assistant have been crowned world champion?

How Will Robotic Warfare Change Tactics?

By focusing on the people involved in robotic warfare, the military can successfully navigate the changing tactical landscape in pursuit of victory. Excellence through tactical training will provide a compass, but the exact route will be discovered over time through experience and ingenuity. While specific tactics in robotic warfare are yet to be determined, tactical principles and the unique capabilities of robotics point to some potential areas of focus for research.

• Resistance to radiological, biological, and chemical warfare: The effects of these attacks, while often fatal to human warfighters, pose little danger to robots. Civilians remain susceptible to these types of weapons, but robotic warfare can diminish the weapons’ military utility.

• Distributed command: In conventional warfare, the loss of a battlefield commander can introduce chaos and lead to defeat. In robotic warfare, there is no such commander for the enemy to target. Even if a commander is using one particular robot and it is destroyed, the command function can be transferred to the next available machine.

• Removal of human emotion on the battlefield: Even the most courageous warfighters take cover from suppressive fire or suffer shellshock from nearby explosions. Human nature has always governed the dynamics of battle, but robots are not subject to emotions such as fear and intimidation. Moreover, an army of unwavering advancing robots can be a daunting sight to an enemy force.

Not only will robotic warfare change the tactics of the military with the machines, but the military facing them will also be forced to reevaluate the way it fights. Just as robots will precipitate changes in tactics based on their capabilities, certain vulnerabilities will provide opportunities on which the enemy can focus its tactics.

• Electromagnetic pulse: The radiological effects of nuclear weapons may not be harmful to robots, but another side effect—electromagnetic pulse—could be detrimental. A nuclear weapon’s ability to damage and/or destroy electrical systems could make it the preferred method of attack against a robotic military.

• Cyber warfare: Though still maturing, cyber warfare is more advanced than robotic warfare, and since robots depend heavily on computer networks, they will be a prime target. By infiltrating key networks, an enemy could quickly debilitate large numbers of robots or, much worse, hijack them and turn them against friendly targets.

• Psychological operations: Enemies will be able to capitalize on any mistakes made by robots, especially the killing of innocent civilians. If robotic warfare is not conducted to the highest standards, enemies can paint it as heartless and cowardly and rally public opinion, both local and American, against it.

• Targeting of command centers: Command centers and any other command-and-control nodes will become prime targets because, aside from their traditional value, without them many robots will cease to function. One attack on a command center can effectively defeat thousands of robots on the battlefield. With many such centers located on American soil, attacks on the homeland will become more common.

Why Is Robotic Warfare Being Pursued?

To determine if victory can even be achieved primarily using robots, the motivation behind this type of warfare must be clarified. What capability gaps are these machines intended to fill? Obviously, the introductory scenario represents an unattainable ideal of zero-error warfare; however, the capabilities described can approach that ideal. Furthermore, all the technologies in the scenario are either being researched or currently fielded. The potential for risk mitigation to combat personnel is already displayed by the unmanned systems in operation today, while advancements in computer-processing power continue to reduce the probability of collateral damage. Nevertheless, several other motivations for developing robotic-warfare capabilities are often cited. These include the following.

Some believe that unmanned vehicles could reduce manpower requirements. On the contrary, the military is learning that robotic warfare only displaces the manpower requirements. A typical drone crew consists of a pilot, a camera operator, and an analyst—not to mention a distributed network of analysts and controllers to ensure that raw data is relayed and processed into relevant intelligence.5 Only when the military is willing to hand over tactical control to fully autonomous robots will manpower requirements truly be reduced.

Another frequently touted advantage of robotic technology is the improved mission profile allowed by not having a human located on the platform. For example, removing the person alleviates the constraint on mission duration set by human fatigue and frees up around 200 pounds of payload. Yet these factors should be considered benefits, not motivators, of robotic warfare. Since reliability and maintainability of combat aircraft, particularly in hostile environments, have not fundamentally improved just by removing the pilot, failure rates will still affect mission endurance.

Meanwhile, if additional payload were the prime motivator, the military would have invested at least $50 million per pound of payload per platform, based on a conservative estimate of a $10 billion total DOD investment in unmanned vehicles to date.6 This seems a high price to pay.
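The per-pound figure can be checked with a short calculation. This is a sketch under an assumption: that the figure comes from dividing the quoted $10 billion total investment by the roughly 200 pounds of payload freed by removing the pilot. The constant and function names are hypothetical.

```python
# Figures quoted in the article; the division itself is an assumed reconstruction.
TOTAL_DOD_INVESTMENT_USD = 10_000_000_000  # "conservative estimate" of investment to date
PAYLOAD_FREED_LB = 200                     # approximate payload gained by removing the pilot


def cost_per_pound(total_usd: int, payload_lb: int) -> float:
    """Return the investment attributed to each pound of freed payload."""
    return total_usd / payload_lb


if __name__ == "__main__":
    value = cost_per_pound(TOTAL_DOD_INVESTMENT_USD, PAYLOAD_FREED_LB)
    print(f"${value:,.0f} per pound")
```

Under that assumption the result is $50,000,000 per pound, which matches the figure in the text.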

So, while robotics may offer myriad tactical benefits, their capability to mitigate risk to American warfighters and civilians should provide the central motivation for their advancement. Eventually, when the technology has sufficiently matured, victory achieved through the use of robotics will not only be possible, but plausible. Robotics can have a game-changing impact on par with guided munitions or nuclear weapons. Enemies of the United States have learned over the past few decades that sapping the American public’s will to fight can be an effective strategy. But if they are faced with an assault that effectively eliminates the hazard to American soldiers and innocent civilians, enemies will surely question that strategy.

What’s the Worst That Can Happen?

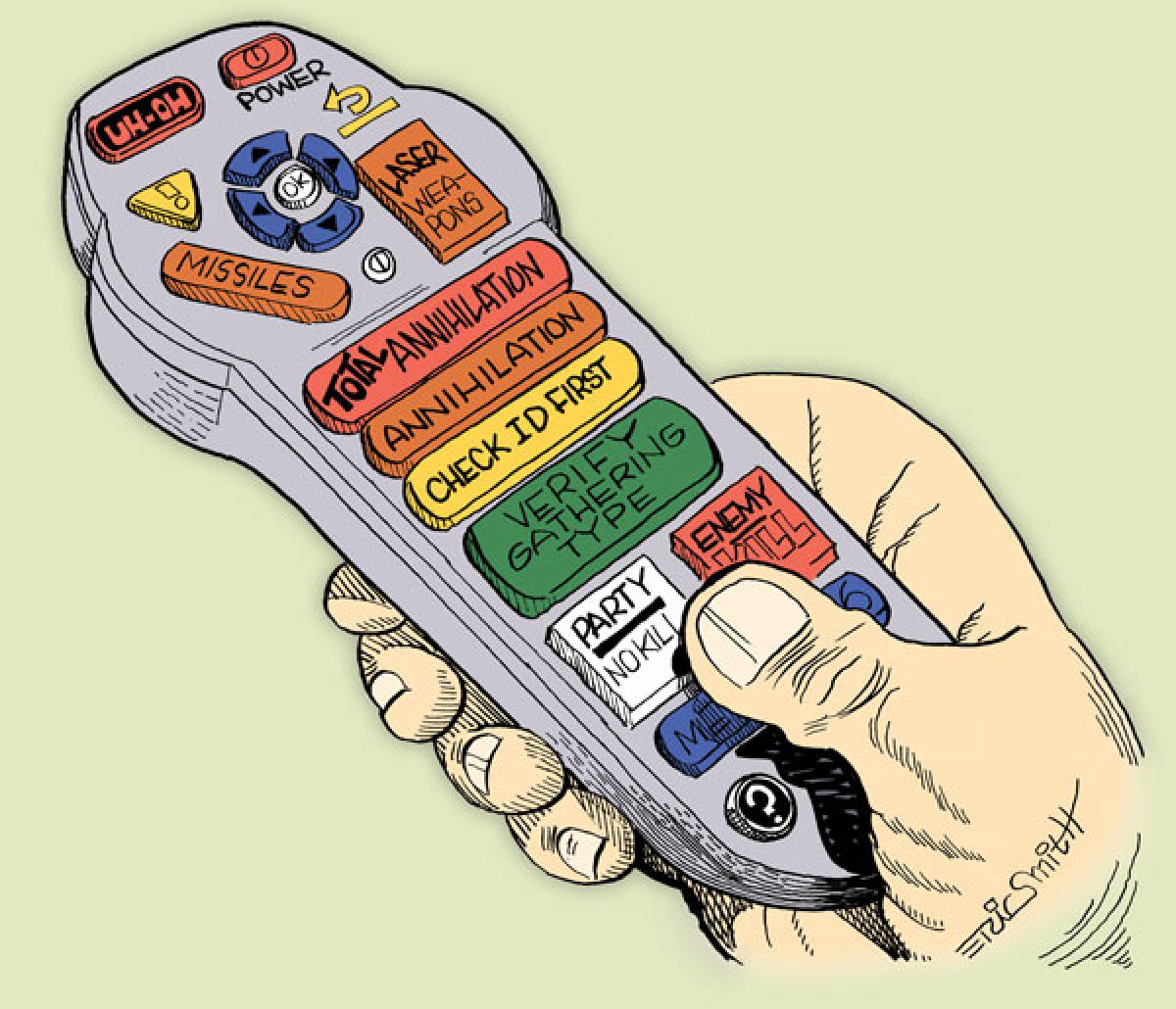

If the military does not grasp control of the tactical challenges of robotic warfare, the results could be disastrous. Consider a different scenario of robotic assault from the one introducing this article. This time, operators of unmanned fighter jets fail to fly tactical ingress and egress routes. Their lack of tactical expertise makes their aircraft easy targets for enemy air defenses. Autonomous ground vehicles cannot overpower unscathed enemy air and ground forces, necessitating a conventional invasion. At sea, an American warship is sunk by an autonomous undersea vehicle whose command-and-control network has been taken over by enemy hackers.

Counterinsurgency drones massacre an entire village during a festival, because they mistake costumed civilians for combatants and fireworks for gunfire. Instead of launching nuclear missiles, the enemy detonates its nuclear weapons within its own borders to incapacitate the remaining robots. Hundreds of thousands of innocent civilians are killed in the process. Aside from the military defeat and terrible loss of human life, much of the capital invested in robotic technology would have been wasted in this scenario, because the American public would never again condone robotic warfare.

In fact, the acceptable limits of error will be much lower in robotic warfare. The rapid technological advancement of the past several decades has shown that as the margin of error diminishes, so does the user’s tolerance for error. In the public’s mind, new achievements become standards, and goals become expectations. It will be the responsibility of tacticians to meet those expectations.

With all of the revolutionary implications of robotics in warfare, they are still just like any other weapon in one very important respect. As with any weapon, the true power of robotics ultimately comes not from the capability of the weapon itself, but from the warfighter’s ability to effectively wield that weapon.

1. “UAV Crash Blamed on Distraction of Battle,” Air Force Times, 5 April 2010.

2. David Zucchino, “U.S. Report Faults Air Force Drone Crew, Ground Commanders in Afghan Civilian Deaths,” Los Angeles Times, 29 May 2010.

3. Monty Newborn, Kasparov versus Deep Blue: Computer Chess Comes of Age (New York: Springer, 1997).

4. “Chess Champion Loses to Computer,” BBC News, 5 December 2006, http://news.bbc.co.uk/2/hi/europe/6212076.stm.

5. Zucchino, “U.S. Report Faults Air Force Drone Crew.”

6. Government Accountability Office, Defense Acquisitions: Opportunities Exist to Achieve Greater Commonality and Efficiencies among Unmanned Aircraft Systems (Washington, DC: GAO, 2009).