Before an autonomous machine kills the first human on the battlefield, the U.S. military must have an ethical framework for employing such technology.

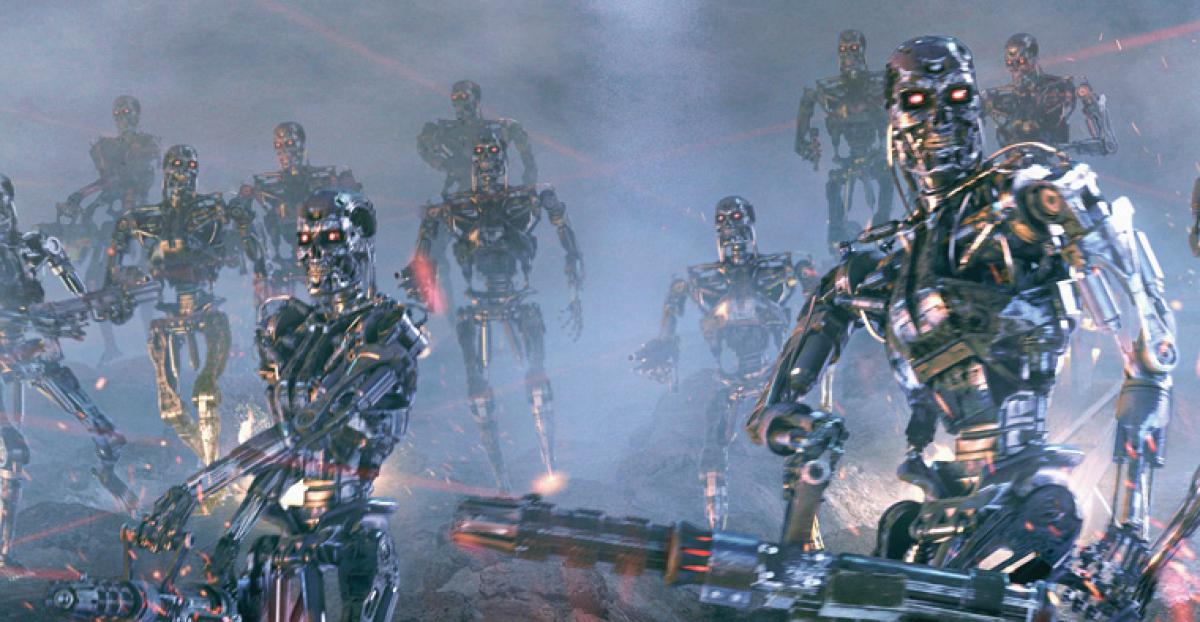

Artificial intelligence (AI) has many peaceful uses, but its employment in combat is a contentious issue among leading scientists, including Stephen Hawking, who has called for a ban. Meanwhile, efforts continue to develop autonomous systems for noncombat roles, such as automated supply trucks, tanker planes, and unmanned harbor sentry vessels. These uses can free sailors and soldiers from logistic and support missions, making more people available for combat roles.1 Eventually, however, AI will be employed on the battlefield, and we must begin preparing for that day now. The consequences of employing autonomous systems in combat take on new dimensions in a future toward which the U.S. military accelerates with each new technological advance.

Robots already perform roles that distance human operators from the dangers of explosive ordnance disposal and of scouting and reconnaissance missions. Over the past 15 years, intellectual capital and resources have been galvanized to advance unmanned systems with increasing autonomy, due in large part to the parallel wars in Afghanistan and Iraq. With the military stretched thin, President Barack Obama called for innovation in the 2010 National Security Strategy. Businesses and academics responded with such energy that technological advances are now forcing political and military leaders to confront fundamental questions about robotic weapons. Advances in AI inevitably will produce truly autonomous learning machines.

We do not yet have the military doctrine, training, and technological safeguards needed to employ learning machines. Existing legal frameworks can address some of the concerns,2 but they will not prepare us for a new kind of fog of war, one that stems from the uncertainty inherent in AI. It is critical and unavoidable that we learn to employ this fast-developing technology effectively, if only because our competitors are investing in learning machines.

Legal Considerations

Aspects of domestic and international law provide a starting point from which to address the challenges of AI in combat. Because these systems can operate inside an enemy’s observe-orient-decide-act (OODA) loop, completing their own decision cycles faster than the enemy can complete his, they could greatly reduce the cost in human life, provided that the criteria for resorting to war (jus ad bellum) are properly met.3 This is not merely a legal exercise: international adherence to the rule of law has helped to ensconce U.S. global power and prosperity.4

Learning machines must pass the same tests as any other new weapon: their use must be necessary, discriminate, and proportional against a military objective.5 The most challenging test is discrimination, the requirement to distinguish noncombatants from targeted combatants and to limit collateral damage.6

Drones have existed for decades, and armed drones such as those used against al Qaeda in Yemen and Afghanistan could have operated much more autonomously than they have. But humans have kept themselves firmly in the decision loop. Today’s drones can detect a target in a surveyed area and classify it as hostile, fly autonomously to a selected point, and employ a growing range of weapons. The conceptual leap from human-guided remote weapons to self-directed combat machines requires a level of precision and accuracy that has not yet been achieved but is quickly being developed. Such advances require improvements in sensors, power sources, and weapons. The most marked developments, however, will come with advances in AI programming and processing speed.

Designing Programs Ethically

How we conceptualize AI programming today has ramifications for the future. Like other software for complex systems, AI depends on subroutines (narrowly focused routines that programmers can easily reuse across applications), which can carry inherent backdoors and weaknesses. The same problem dogs cyber warriors concerned with safeguarding information networks.7 Because design decisions made today become tomorrow’s legacy, the operator must understand this complexity to employ learning machines properly.

One branch of AI, popular in the late 1980s and early 1990s, was fuzzy logic, which reasons in degrees of truth rather than strict true-or-false rules; the very term implies a potential loss of programming control and of clear liability. If we accepted fuzziness, we might produce machines that could assess a situation rapidly and significantly limit collateral damage, thereby arguably making war more humane.8 But what if a robot mistakenly kills an insurgent who had been firing his weapon but now has his hands in the air and is trying to surrender? Aside from being inhumane and unethical, killing people in such situations is counterproductive. To be trusted in combat, these machines must make correct judgments nearly 100 percent of the time, which means they will be held to a higher standard than human combatants.

Safeguarding the network and communications requires protecting their protocols, frequencies, and encryption. Like the crews of ships and aircraft, and like individual soldiers, machines will remain in contact with the chain of command. Usually this communication will be with a human operator, but in swarm tactics human control will be minimized, because reducing communications lessens exposure to cyber and electronic attack.9 Along with the need to stay inside an adversary’s OODA loop, this will drive future designs toward greater autonomy and fewer communications.10

Safely staying inside an adversary’s ever-quickening OODA loop will depend on advances in sensors, but even more on advances in computer processing power. As we attempt to teach machines to apply rules of engagement, a new aspect of the fog of war will emerge: the uncertainty that learning machines themselves bring to conflict.

Humans Are Always in Charge and Liable

Once robots are reliable enough to execute rules of engagement, concern will shift to liability when a machine kills the wrong person or causes unnecessary collateral damage. This multifaceted unease stems from system reliability, unexpected environmental changes and their effects on a machine’s sensors, and weaknesses in the training of those who control the technology.11 Leaders will need to set clear parameters for the use of these systems.

Such complex programs are characteristically brittle: a flaw in one subprogram or sensor layered into a larger system can cause a catastrophic failure.12 To weed out the errors inherent in so complex a process, prototypes must undergo a prolonged testing period, which, because learning machines continue to change as they learn, is likely to remain open-ended.

Another source of concern is the threat of cyberattacks, whether through backdoors inserted during microchip production or through infiltration of the networks in which these machines operate.13 To prevent this, certified and nationally controlled facilities likely will be needed, especially for the parts associated with the kill decision: sensor data processing, target validation, and engagement orders.

Beyond production flaws, liability for operational mistakes must rest with the person charged with the proper employment of learning machines. This liability requires training those who use such systems to judge when their use is appropriate and to control the safeguards on the systems’ “independent learning.” Such knowledge is vital and must be a prerequisite for certifying a cadre of learning machine professionals.14

Determining whether a criminal act was perpetrated by the human operator or the designer of a learning machine often requires establishing ill intent, which is complicated by AI’s ability to solve problems without human intervention.15 Because AI-created outcomes would be unpredictable and causality impossible to prove, this situation could create a legal fiction in which no one is accountable.16 For an AI system itself to have ill intent, however unlikely, it would need the equivalent of human emotions, so that it could understand the ethical consequences of its actions. Learning machines are coming, and soon, but it is highly unlikely that a machine with human-type empathy could be built; therefore, humans cannot be removed entirely from liability. Because employing deadly force raises an ethical question and AI is nonethical, a human always must decide and be liable for these machines.

Misidentification is another problem. Knowing when to employ a learning machine requires adequate knowledge of the system’s limitations in a given operating environment. Misidentification may not be a war crime, because it is an honest mistake resulting from errors in processing sensor input, but its consequences often run contrary to the nation’s war aims.17 Imagine a Boomerang counter-sniper robot deployed in an urban area from which the population has been evacuated, but where civilians begin returning after a period of quiet. Because of the Boomerang’s limited sensor and processing capabilities, it may no longer be an appropriate weapon in an environment mixing civilians and insurgents. If this limitation is known, the person who sends the machine into battle could be liable, as has been understood since the High Command Case of 1948 and as was reiterated more recently in the Rome Statute.18 People using these systems must have a deep understanding of the dynamic battlefield and of how the environment affects learning machines.

Issuing orders to a learning machine may seem straightforward but is actually quite complex. The film 2001: A Space Odyssey dramatized this dynamic when the computer HAL 9000 resolved the dilemma of conflicting orders by turning on its human crew. Although extreme, the example illustrates that humans must be circumspect about the orders they give and liable for them.

Rules of Employment

The following recommendations establish a baseline for the effective, responsible employment of fully autonomous learning machines in combat, while anticipating potential legal issues:

• Establish doctrine on which to base the training of a cadre of military and civilian specialists in the employment and maintenance of learning machines. Humans always must be the first layer above machines in the chain of command.19 Technology should never be allowed to establish or modify the parameters (including rules of engagement) under which life-and-death orders are given to other machines.

• Once principles have been agreed upon, along with a set of guidelines for the manner in which the systems are to be employed, train a group of experts who can ensure desired outcomes.

• Establish professional standards for the programmers of AI systems so that liability and malpractice legal structures may be created.

• Consult closely with allies and international institutions such as the International Criminal Court. Formulating these frameworks will require discussion aimed at a general consensus on the use of learning machines in war. These exchanges are likely to expose differences between the “direct knowledge” and “should have known” standards of command responsibility under Protocol I of the Geneva Conventions.20

• Ensure secure, nationally controlled sources of kill-chain processing hardware and software to prevent potential cyber backdoors.

• Establish a compensation fund to protect a growing industry with national-security implications from ruinous litigation. Creating this fund should encourage technological advances while ensuring that the industry remains accountable for negligence. It should be built on a framework similar to the one that shielded airlines from 9/11 wrongful-death claims. The model also has been recommended for medical nanorobots.21

Learning machines provide an opportunity to lessen unnecessary combatant death and destruction by vastly improving precision and accuracy. As nonethical and unemotional entities, they also may prevent revenge killings and atrocities. They can reduce the impact of relative troop strength while distancing warriors from combat. On the downside, this same distance blurs the distinction between civilian and military participants.22 In addition, failure to properly manage advancements in AI could result in deploying autonomous machines prematurely. The resulting carnage would undermine the benefits that AI offers on the battlefield. But we can still avoid the historical problem of employing revolutionary new weapons without thoroughly weighing the consequences. As learning machines become an essential element of combat, the ethical basis on which we wage war, and the way we fight, must undergo renewed analysis, scrutiny, and validation.

1. P. W. Singer, “Robots at War: The New Battlefield,” Wilson Quarterly, Autumn 2008.

2. Artificial intelligence (AI) is defined here as the science of endowing programs with the ability to change themselves for the better as a result of their experiences. Roger Schank, “What Is AI, Anyway?” AI Magazine, vol. 8, no. 4 (1987).

3. Stephen Dycus, Arthur L. Berney, William C. Banks, and Peter Raven-Hansen, National Security Law, 4th ed. (New York: Aspen Publishers, 2007), 392–93.

4. National Security Strategy, May 2010.

5. James Baker, In the Common Defense: National Security Law for Perilous Times (New York: Cambridge University Press, 2007), 215–16.

6. Protocol I to the Geneva Conventions of 1949 (1977), 1125 U.N.T.S. 3, articles 48, 51(4), and 57(4). Yoram Dinstein, The Conduct of Hostilities under the Law of International Armed Conflict, 2d ed. (New York: Cambridge University Press, 2010), 62–63.

7. Richard A. Clarke and Robert K. Knake, Cyber War: The Next Threat to National Security and What to Do about It (New York: HarperCollins, 2010), 81–83.

8. Armin Krishnan, Killer Robots: Legality and Ethicality of Autonomous Weapons (Aldershot, UK: Ashgate, 2009), Kindle e-book.

9. Katherine D. Mullens et al., “An Automated UAV Mission System,” SPAWAR Systems Center, San Diego, http://www.spawar.navy.mil/robots/pubs/usis03aums.pdf.

10. Krishnan, Killer Robots.

11. P. W. Singer, Wired for War: The Robotics Revolution and Conflict in the Twenty-first Century (New York: Penguin Press, 2009), Sony e-reader.

12. Krishnan, Killer Robots.

13. Clarke, Cyber War, 85–95.

14. Article 28(a) of the Rome Statute places responsibility on the commander who exercises “effective command and control” over subordinates committing war crimes.

15. Dinstein, The Conduct of Hostilities, 279.

16. Bert-Jaap Koops, Mireille Hildebrandt, and David-Olivier Jaquet-Chiffelle, “Bridging the Accountability Gap: Rights for New Entities in the Information Society?” Minnesota Journal of Law, Science, and Technology, vol. 11, no. 2 (2010): 497–561.

17. Dinstein, The Conduct of Hostilities, 280.

18. Ibid., 271, 276.

19. Michael N. Schmitt, “War, Technology and the Law of Armed Conflict,” The Law of War in the 21st Century: Weaponry and the Use of Force, International Law Studies, vol. 82 (Naval War College, 2006): 148, 163–65.

20. Harvey Rishikof, “Institutional Ethics: Drawing Lines for Militant Democracies,” Joint Force Quarterly 54 (3rd quarter 2009).

21. Shalyn Morrison, “The Unmanned Voyage: An Examination of Nanorobotic Liability,” Albany Law Journal of Science and Technology, vol. 18 (June 2008).

22. Schmitt, “War, Technology and the Law of Armed Conflict,” 157–62.

Captain Sadler was most recently assigned to the Navy Asia Pacific Advisory Group on the Navy staff. Before that, he was a senior assistant to Commander, U.S. Pacific Command, where he was lead for Maritime Strategy and Policy and coordinated execution of the Rebalance to the Asia-Pacific. A foreign area officer and submariner, he served on attack and ballistic-missile submarines, as flag lieutenant to Commander, Seventh Fleet, in Japan, and on the CNO staff advising on Asia-Pacific issues. He graduated from the U.S. Naval Academy in 1994, was a 2005 Olmsted Scholar in Japan, and is a 2011 distinguished graduate of the National War College.